As professionals working with children with complex disabilities, we have seen how eye gaze technology has changed the lives of many of our students. When severe physical limitations prevent successful or efficient use of touch screens or switch scanning, eye gaze is often a solution, and we have seen many of our students finally able to make their needs and thoughts known. They can communicate, participate in lessons, and play computer games. Eye gaze technology has not only opened up the world for children living with complex disabilities, allowing them to develop new skills, but has also allowed people with progressive disorders such as ALS to remain active participants in their lives for longer.

iOS devices have also had a significant impact on people with disabilities. The portability, intuitive interface, multitude of apps, and accessibility features make them a favorite device both for individuals with disabilities and for the professionals working with them. However, while eye gaze technology for Windows-based devices has steadily improved and the variety of eye-gaze-compatible apps and software has grown, the technology has remained frustratingly elusive on iOS devices.

Finally, that may be changing!

In iOS 11, Apple introduced ARKit, which brought augmented reality (AR) capabilities to iOS. In 2017, Apple introduced the TrueDepth camera system, a front-facing camera with components that capture 3D information, on the iPhone X; it reached the iPad Pro in 2018. Beyond face recognition for device security and fun photo apps, the combination of these two technologies allows apps to track the eyes and face.

Inspired by this recent blog post by Tech Owl AAC Community, which highlights some of the recent apps using this technology, we downloaded a couple of them to a colleague’s iPhone X and tried them out for ourselves to get a sense of how they work.

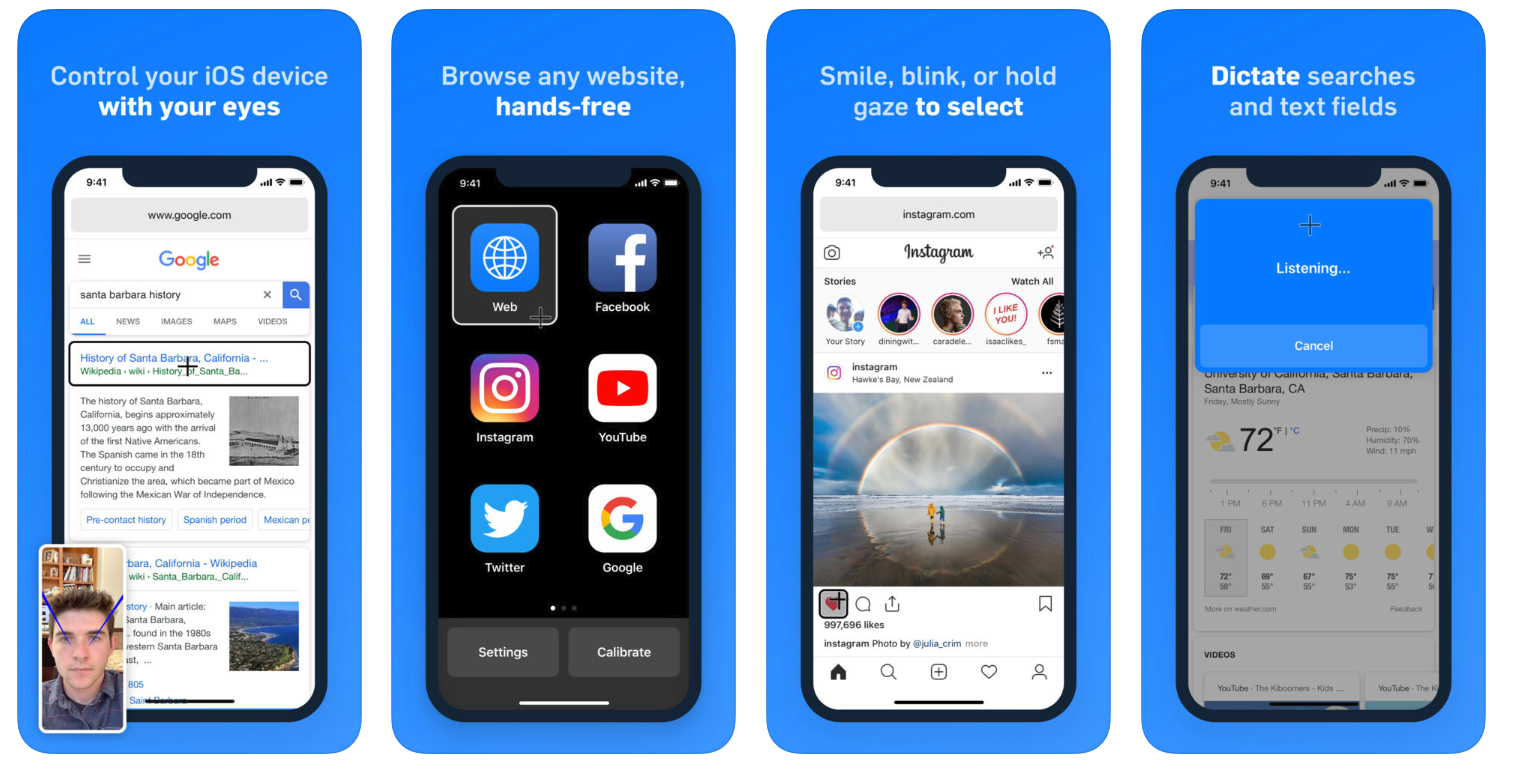

Hawkeye Access (free) uses eye gaze technology to allow users to browse the web and navigate websites. It has a simple 5-point calibration system and settings that allow the sensitivity of the controls to be customized. Selection options include blink, stare, and smile. Favorite sites can be accessed directly from the app's home page. Search within the apps themselves uses speech recognition, so users need clear speech to use it effectively. Navigation controls are located at the edges of the screen. For example, to scroll down, the user looks at the bottom edge and a scroll button appears, indicating that the user is about to scroll down. Holding the gaze for a set time (adjustable in settings) selects the button, and the page scrolls down. To return to the home page, the user looks at the bottom right corner and a home button appears. Items and boxes within the apps are selected the same way, and selectable areas of the screen are indicated by a highlighted box.
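For readers curious about what happens behind a "stare" selection, the idea of holding the gaze for a set time can be sketched in a few lines of Python. This is only an illustration of the general dwell-time technique, not Hawkeye Access's actual code: the class name `DwellSelector`, the function `edge_target`, the target names, the 40-point margin, and the one-second default are all assumptions made up for the example.

```python
from typing import Optional


class DwellSelector:
    """Reports a selection once the gaze has rested on one target long enough."""

    def __init__(self, dwell_seconds: float = 1.0) -> None:
        # Hypothetical default; the apps described here expose this in settings.
        self.dwell_seconds = dwell_seconds
        self._target: Optional[str] = None  # target currently under the gaze
        self._since = 0.0                   # time the gaze arrived on it
        self._fired = False                 # True once this dwell has selected

    def update(self, target: Optional[str], now: float) -> Optional[str]:
        """Feed one gaze sample; return the target name when the dwell completes."""
        if target != self._target:
            # Gaze moved to a different target (or off all targets): restart.
            self._target, self._since, self._fired = target, now, False
            return None
        if (target is not None and not self._fired
                and now - self._since >= self.dwell_seconds):
            self._fired = True  # select only once per continuous dwell
            return target
        return None


def edge_target(x: float, y: float, width: float, height: float,
                margin: float = 40.0) -> Optional[str]:
    """Map a gaze point to an assumed edge control (origin at top-left)."""
    if x >= width - margin and y >= height - margin:
        return "home"         # bottom right corner
    if y >= height - margin:
        return "scroll_down"  # bottom edge
    if y <= margin:
        return "scroll_up"    # top edge
    return None               # gaze is on the page content
```

In use, each new gaze sample would be passed through `edge_target` and then into `update`; a longer `dwell_seconds` makes accidental selections less likely at the cost of slower navigation, which is the trade-off the sensitivity settings let each user tune.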

It took a little getting used to, but within a few minutes we were able to access the apps and start browsing. Some of the controls were more difficult than others. For example, in YouTube it was easier to select a video from the icons that popped up in our history than to find one through the search box. The search button is small and located close to the edge, so it was difficult to “grab”. The experience on a larger screen, such as the iPad Pro, may prove different, and of course selection control would likely improve with more practice. This app has definite potential for users with motor impairments and will no doubt improve with future updates.

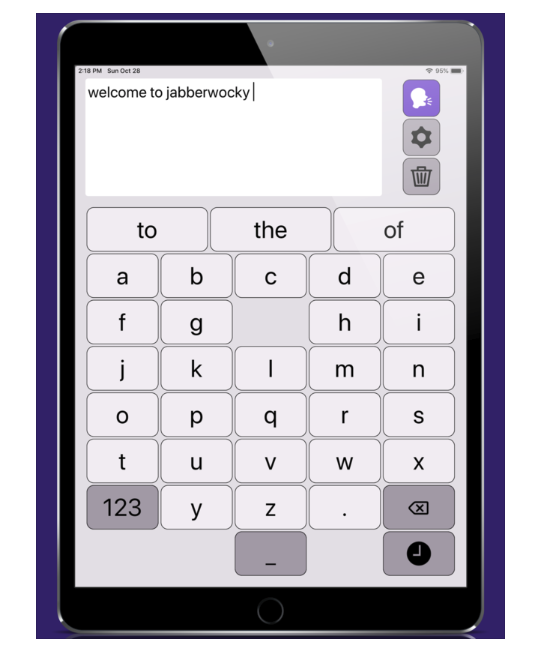

Jabberwocky Hands-Free AAC (30-day free trial period, after which a subscription is available for purchase)

Jabberwocky is an augmentative and alternative communication (AAC) app that tracks head movements to allow users to type messages on the screen, which can then be read aloud. It has a simple, attractive design and was relatively easy to use. It took a few minutes to get used to the sensitivity of the head movements, but there are settings to adjust cursor speed and sensitivity to movement. We would be interested to try it out on a larger screen.

The calibration was quick and easy. The keyboard offers predictive text, allowing for more efficient typing, and the user can easily access lists of recent, frequent, and favorite messages from the side menu. Menu icons are simply designed and easy to reach.

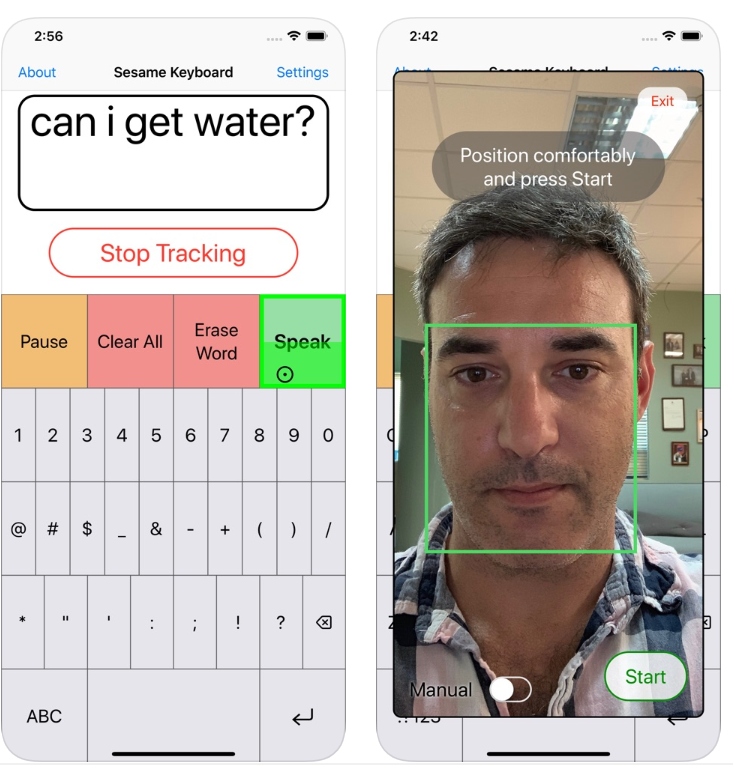

Sesame Talking Keyboard (iOS). Sesame Enable is a start-up well known in the world of accessibility for its app Open Sesame!, a touch-free head-tracking program that allows users to control their Android devices with just head movements. In September 2019, the company released a new app, Sesame Talking Keyboard, for iOS devices. This app allows users to type messages using head tracking; the messages can then be read aloud.

The app interface is clean, with large buttons. Calibration is easy and the app is simple to use. Sesame Talking Keyboard is free and supports devices with iOS 9 and above.

TouchChat, a popular AAC app, recently announced a public beta of head pointing, to be released in early March as an update for all current users. The feature will not require any calibration and will be available in the app settings after the update.

These are definitely exciting developments and we can’t wait to see what other apps arrive making use of this technology as it advances! We’d love to hear about your experiences with these features and apps, so let us know how it goes!

Thanks for including us in this blog post! I wanted to let you know we’ve also recently launched Jabberwocky Hands-Free Browser on the App Store. It’s a web browser controlled hands-free using head movement and speech.

https://itunes.apple.com/us/app/jabberwocky/id1455137144?mt=8